In the next few years, food robotics will be a more than $3 billion industry, according to Research and Markets. Automation, the workforce shortage, and some exciting new developments are expanding the range of jobs that robots can do. In particular, scientists and innovative companies have been hard at work developing robots with advanced sensory systems. Here’s a round-up of current and future applications for sensory robots in the food industry.

Vision

Vision was the first sensory system developed for robots, and it’s the most advanced. Consultant John Henry, of Changeover.com, recently told Food Processing that “vision is what really makes robots useful.”

Robot vision is used in the food industry in a number of ways, for example:

- Food quality grading and inspection. Robots can detect quality attributes of food, like bruising on produce, to determine whether it meets quality thresholds.

- Foreign material identification. Robots can identify foreign material contaminants, like plastic and metal, in finished food products.

- Meat cutting. Robot butchers can create 3D models of meat carcasses and then cut them more efficiently and with less waste than human workers.

- Reading bar codes and labels. Robots can read bar codes, identify mislabeled products, and sort packaged products.

Smell

Every odor has a specific pattern, and now robot noses are catching up to, or even surpassing, human noses’ ability to distinguish them.

OlfaGuard, a Toronto-based bio-nanotech startup, is currently developing an E-nose that’s able to sniff out pathogens, like Salmonella and E. coli, to more quickly identify contamination and prevent food poisoning.

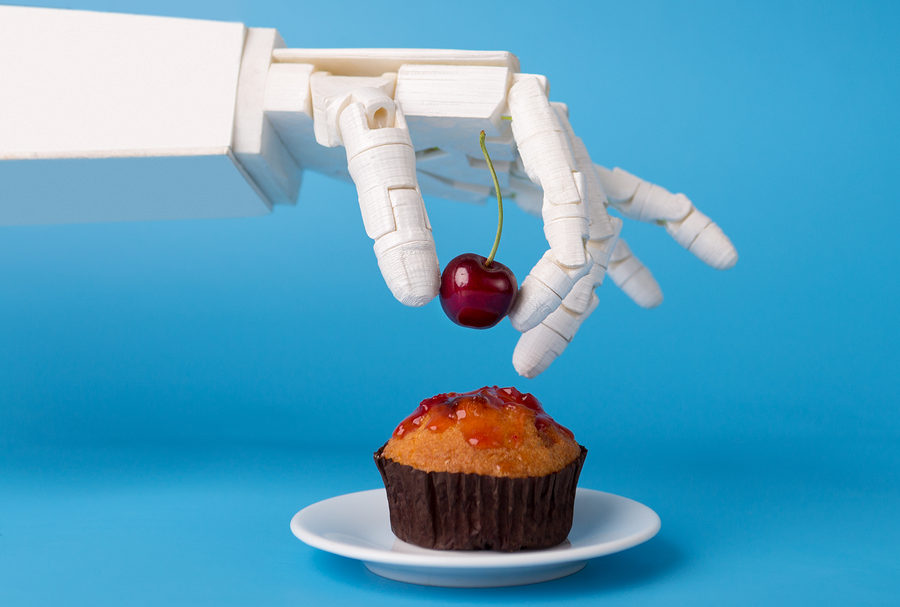

Touch

Companies like Soft Robotics have revolutionized the industry by creating robots with a grip soft enough to pick up a piece of produce without blemishing it.

In the future, this sensitivity and dexterity may go even further. Researchers at Stanford have developed an electronic glove that “puts us on a path to one day giving robots the sort of sensing capabilities found in human skin.” Currently, the sensors work well enough that a robot can touch a raspberry without damaging it. The eventual goal is for the robot to be able to detect a raspberry using touch and also pick it up.

Taste

Robots are even getting in on the tasting action. A team at IBM Research is developing Hypertaste, an artificial intelligence (AI) tongue that “draws inspiration from the way humans taste things” to identify complex liquids. It takes less than a minute for the system, which works via sensors and a smartphone app, to measure the chemical composition of the liquid and identify it by cross-referencing with a database of known liquids. Possible applications include verifying the origin of raw materials and identifying counterfeit products.
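For the curious, the "cross-referencing with a database" step can be pictured as a nearest-match lookup. The sketch below is only an illustration of that general idea, not IBM's actual method: the function name `identify_liquid`, the `max_distance` cutoff, and every number in `KNOWN_LIQUIDS` are made up for the example.

```python
import math

# Hypothetical reference fingerprints: each known liquid maps to invented
# sensor readings (arbitrary units). These are NOT real Hypertaste data.
KNOWN_LIQUIDS = {
    "mineral water": [0.10, 0.05, 0.20, 0.02],
    "orange juice": [0.80, 0.60, 0.30, 0.45],
    "red wine": [0.55, 0.90, 0.70, 0.65],
}


def identify_liquid(reading, database=KNOWN_LIQUIDS, max_distance=0.25):
    """Return the closest known liquid, or None if nothing is close enough."""
    best_name, best_distance = None, float("inf")
    for name, fingerprint in database.items():
        # Euclidean distance between the new reading and a stored fingerprint.
        distance = math.dist(reading, fingerprint)
        if distance < best_distance:
            best_name, best_distance = name, distance
    # Treat a poor best match as "unknown" instead of forcing a label.
    return best_name if best_distance <= max_distance else None


print(identify_liquid([0.78, 0.62, 0.33, 0.44]))
print(identify_liquid([0.0, 1.0, 0.0, 1.0]))
```

In this toy version, a reading that matches nothing in the database comes back as `None`, which is roughly how a counterfeit or unexpected liquid might get flagged for a closer look.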

According to a report submitted to China’s central government, Chinese food manufacturers are using AI-powered taste-testing robots to determine the quality and authenticity of mass-produced Chinese food, the South China Morning Post reports. The robots, which also have eyes and noses, are stationed along production lines to evaluate the food all the way through the process. This has resulted in “increased productivity, improved product quality and stability, reduced production costs, and…technical support to promote traditional cuisine outside the country.”

Hearing

Robots can already hear and process voices (sometimes too well; I’m talking to you, Alexa). But scientists are also busy developing robots that can discern sounds other than the human voice. Possible applications include responding to cries for help or reacting when things break in the factory. No word on anyone using this technology in the food industry yet, but if you hear about anything, let us know!